How to Tune an LLM: Hyperparameters and Metrics

Last time we figured out how to prepare data for our language model, and now we can initialize our BERT model for fine-tuning. But before diving into a bunch of deep learning jargon on hyperparameters and metrics, I thought it would be useful to build our own mental model on what goes on during the fine-tuning process.

Backpropagation

When training a language model, we're basically teaching the computer how to make guesses on an exam we wrote. We let the computer make guesses, observe how stupid it is, and fix it up to make better guesses.

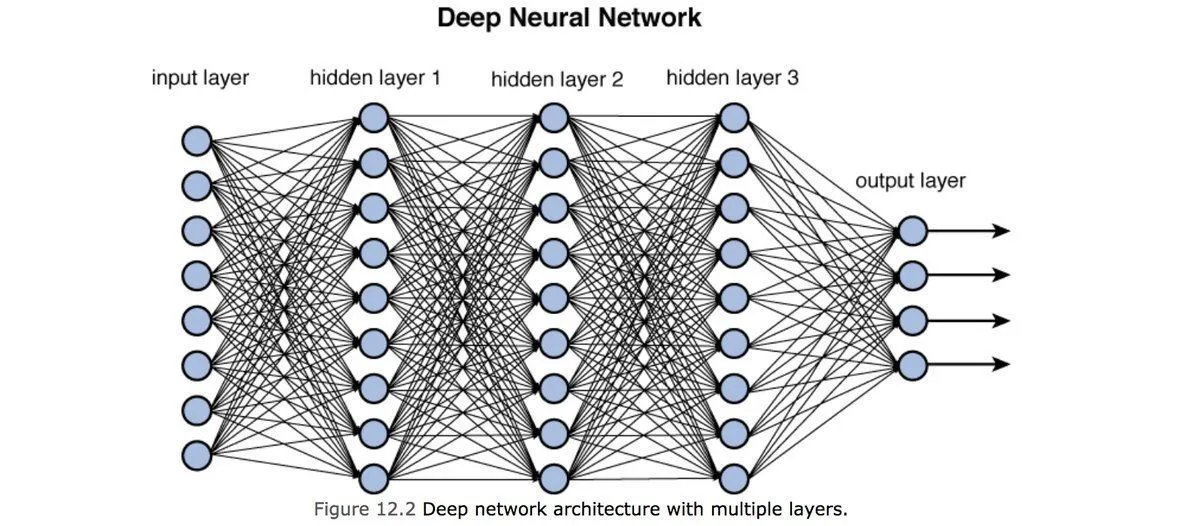

We conduct this teaching process on a model (a.k.a. a neural network) through the following process:

- We assign weights (a.k.a. probabilities) to our preprocessed training data and dump it on our model. The training inputs travel through multiple layers of computation nodes, each with its own set of weights, until it reaches the set of target outcomes we've defined. This step is called the forward pass.

- Next, we calculate how different the model outcome is compared to the outcome we wanted. We can call this difference loss (a.k.a error rate).

- We then backpropagate from the weights of our output nodes to our input nodes to figure out how much each weight in the network contributed to the loss.

- Now we change up the input weights we've assigned in step 1, attribute some bias, and hope the model will do better next time in the next training session.

These four steps we described are commonly represented by a complicated diagram that looks like this:

If you want to go further into the computation behind the backpropagation step like a real pro, I'd watch this video.

Hyperparameters

Aside from the input weights we must assign, there are also other parameters we can control to influence the machine-learning process. Here are four that seemed the most important:

learning_rate

The learning rate determines the pace we force our computer through the mundane training process we described earlier. We want to pick the right rate to help our model minimize loss.

- Too small of a learning rate can lead to the model getting stuck. If you have a large dataset and limited computing resources the model can literally "give up" on you.

- Picking a large learning rate can create an unstable training/tuning process. As a result, the model will make suboptimal ("bad") guesses.

weight_decay

The weight decay is a multiplier to discourage weights from getting too large. This parameter is mainly used to prevent overfitting. An excessive weight decay can lead to underfitting, where all the outcomes trend towards a uniform distribution.

batch_size

The batch size refers to the number of training examples utilized in one iteration of the training process. You can read more about the reasoning behind this parameter here.

num_train_epochs

The number of times you want to process your training data through your model.

Metrics

An important metric to track is the accuracy_score. The accuracy score tells us the number of times our model made a correct guess.

I also found this neat helper from scikit-learn called precision_recall_fscore_support.

The metrics are documented as follows:

The

precisionis the ratiotp / (tp + fp)where

tpis the number of true positives

fpthe number of false positivesThe precision is intuitively the ability of the classifier not to label a negative sample as positive

The

recallis the ratiotp / (tp + fn)where

tpis the number of true positives

fnthe number of false negativesThe recall is intuitively the ability of the classifier to find all the positive samples

The

F-betascore can be interpreted as a weighted harmonic mean of the precision and recall, where an F-beta score reaches its best value at 1 and worst score at 0The

F-betascore weights recall more than precision by a factor of beta

beta == 1.0means recall and precision are equally importantWhen

beta == 1.0, the harmonic mean ofprecisionandrecallis calculated as:F1 = 2 * (precision * recall) / (precision + recall)

The

supportis the number of occurrences of each class in our reference outcomes

Probability Normalization

The metrics from scikit-learn seem great! But I had no idea how to actually apply the function ...

I flipped through some people's machine-learning homework and found an example of evaluation metrics computed with precision_recall_fscore_support:

import numpy as np

from transformers import EvalPrediction

from sklearn.metrics import precision_recall_fscore_support

def compute_metrics(eval_pred: EvalPrediction):

logits, targets = eval_pred

predictions = np.argmax(logits, axis=-1)

precision, recall, f1, support = precision_recall_fscore_support(targets, predictions)

return {"precision": precision, "recall": recall, "f1": f1, "support": support}

With the help of my friend ChatGPT, I found out that logits refer to the raw, unnormalized predictions generated by a neural network before they are transformed into probabilities.

ChatGPT also gave me a step-by-step breakdown on what np.argmax(logits, axis=-1) did:

For more on probability normalization, I would also look into functions such as softmax, sparsemax, etc.

Now I think we're finally ready to do some fine-tuning!